One Million Frames per Second (work in progress; concept phase)

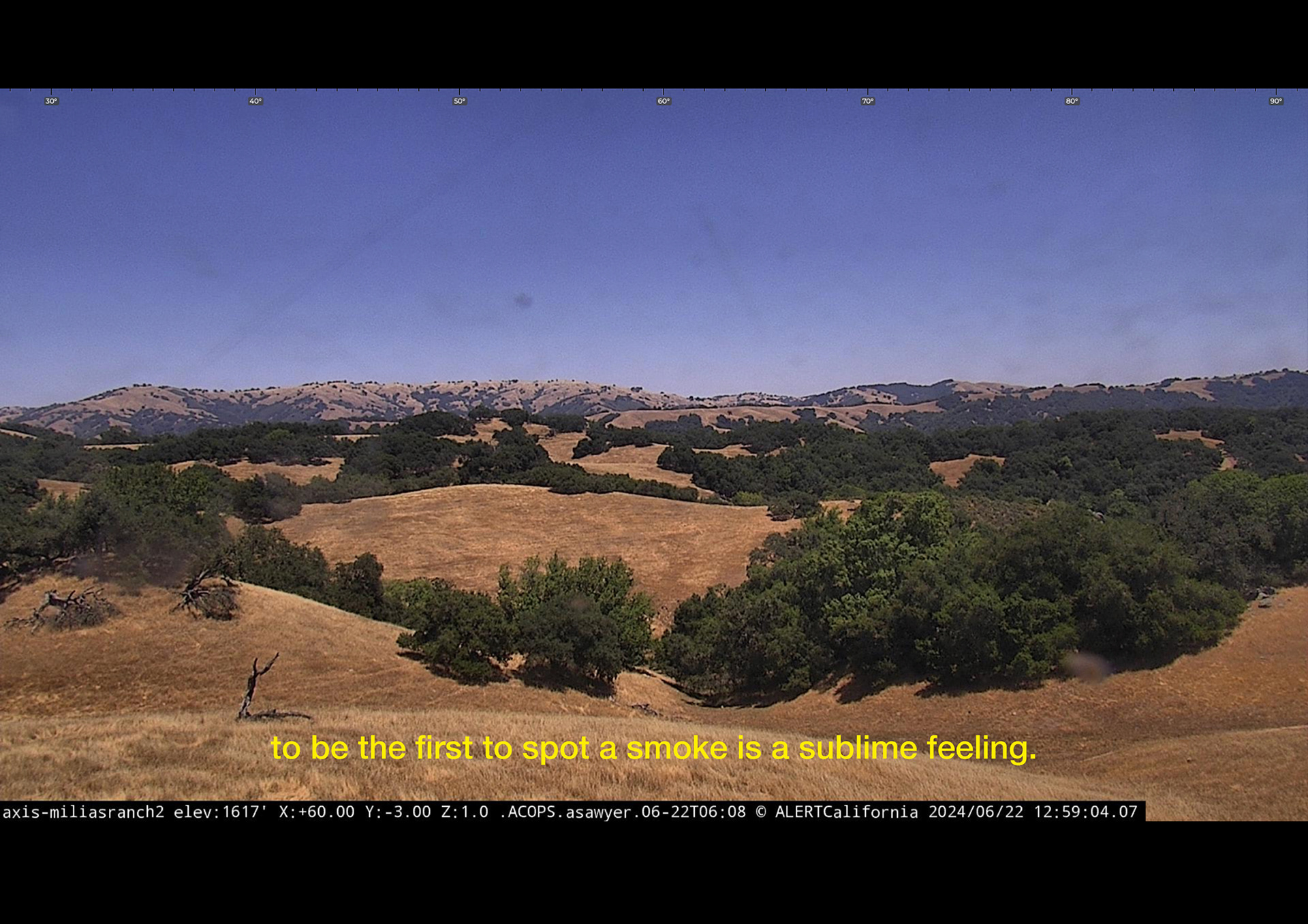

In response to the ecological crisis, automated photography and artificial intelligence are increasingly applied across many fields, for example to monitor and prevent wildfires. Historically, fire detection relied on human observers, known as fire lookouts, stationed in observation towers. Today, this role has largely been taken over by digital surveillance systems. Their cameras generate billions of images that are not intended for the human eye and are rarely, if ever, seen by a person.

Photographic images are used to "teach machines to see" by training them to recognize and evaluate visual patterns. This forms the foundation for machine vision systems capable of making automated decisions. Such autonomous programs are increasingly integrated into diverse applications, shaping our lives and our decision-making processes. How does machine vision differ from human perception, and what insights can be derived from this vast output of images?

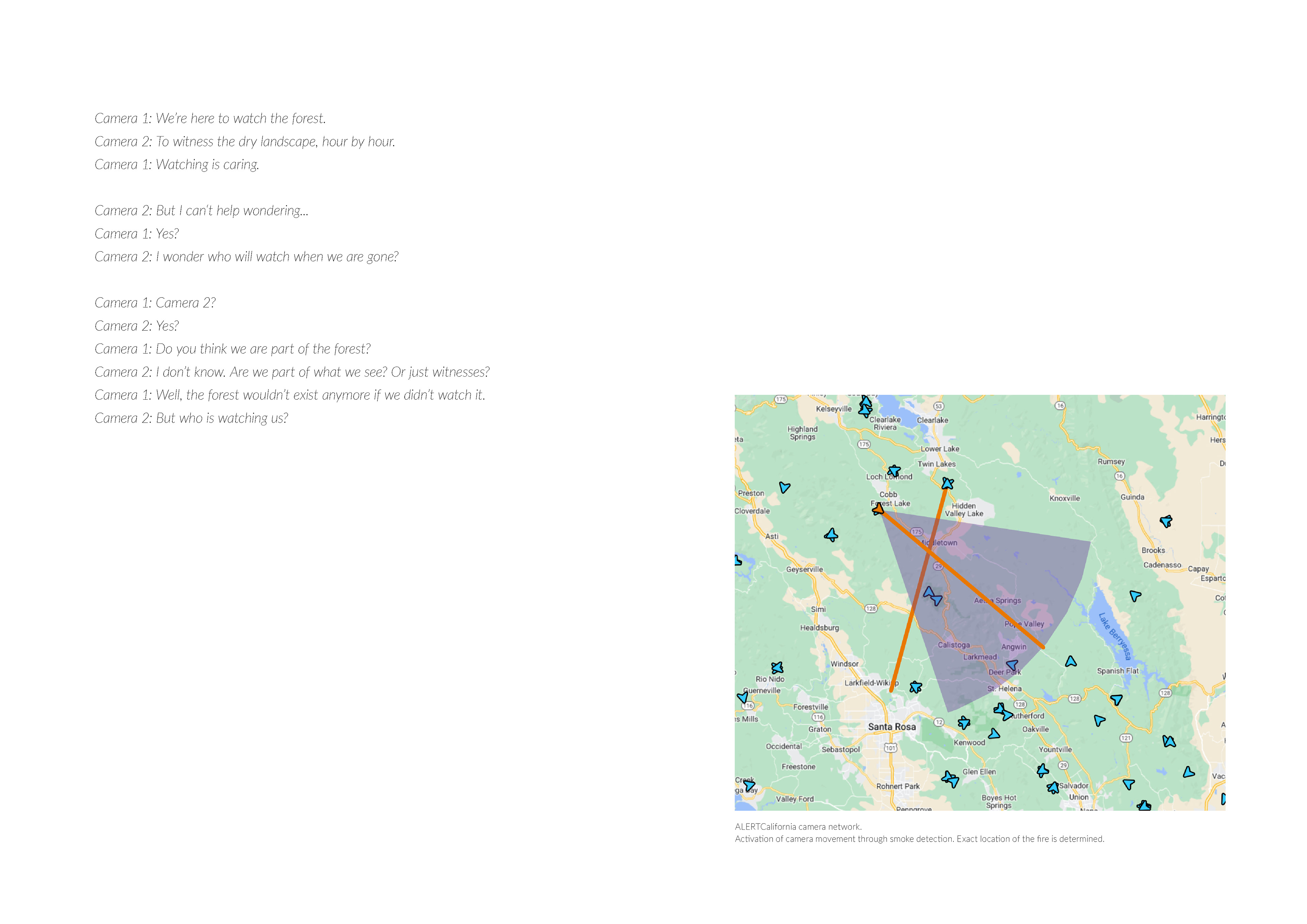

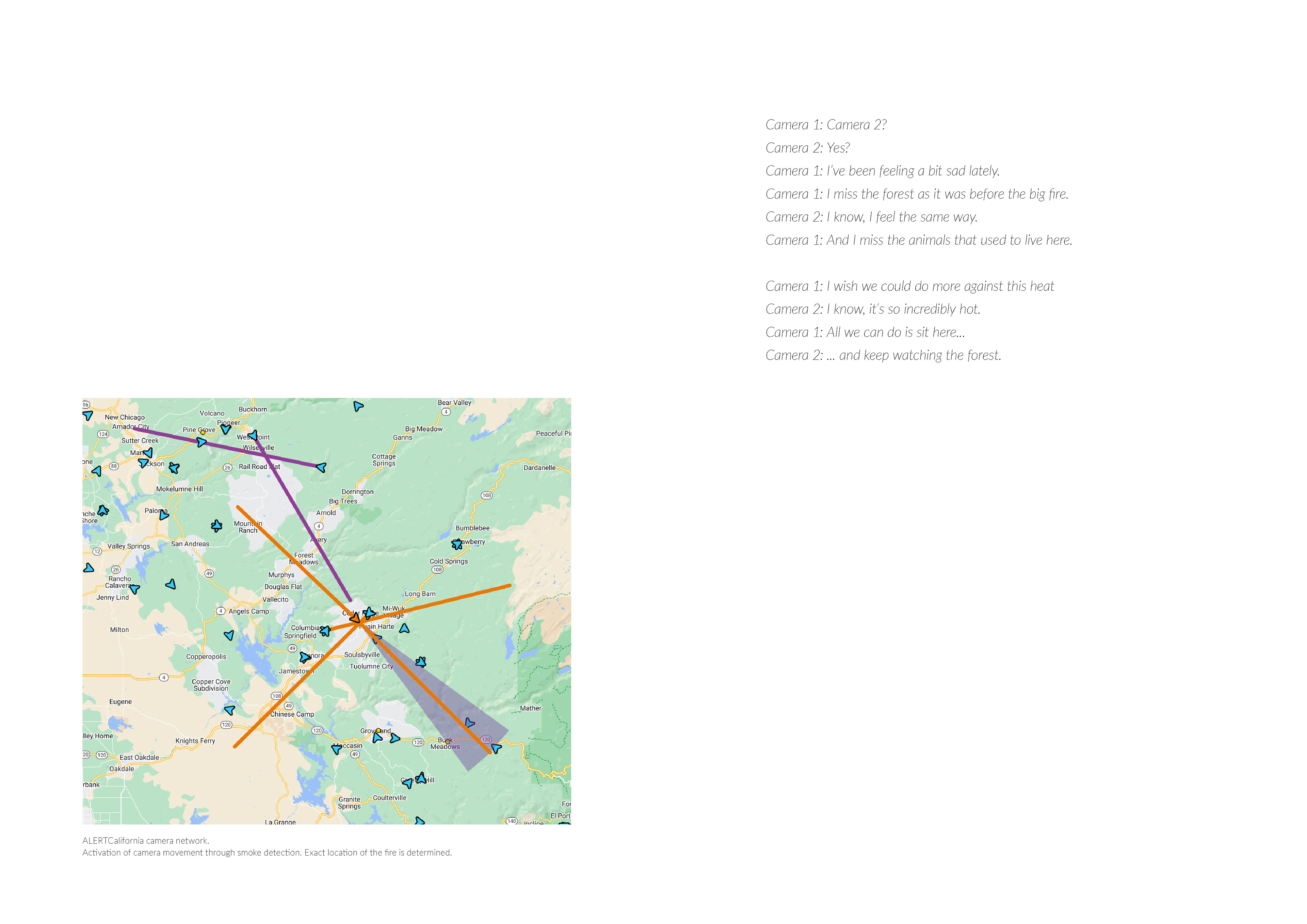

In 2023, ALERTCalifornia launched a project that uses artificial intelligence to scan landscapes and provide early warnings of emerging wildfires. The system consists of more than 1,000 high-resolution 360-degree cameras distributed across California, capable not only of detecting smoke but also of predicting the potential spread of fires. The cameras are interconnected and automatically adjust their perspectives in response to one another.

In “One Million Frames per Second”, the automated camera network of ALERTCalifornia is anthropomorphized. The cameras are seen not merely as passive observers but as entities that develop distinct personalities and voices. As the narrative progresses, their perspectives become more autonomous and critical, and they begin to question their function as a surveillance system. Adopting a documentary approach, we aim to examine how forests are visually rendered through machine perception (before, during, and after wildfires), offering a holistic, 360-degree perspective.